Update on eBay Listing Quality Report

First I want to give some credit where it is due on this one. Harry Temkin and his team have engaged on these issues in ways I have not seen before.

From Harry joining the eBay community specifically to respond to the feedback being given, to having a team of product managers reach out for a Zoom call to review the issues with this report - this is the kind of positive engagement with sellers that eBay needs to make standard operating procedure and it is refreshing to see.

That being said, it has been almost 2 months and many of the issues that were originally raised haven’t been fixed.

There are still many examples of blatantly incorrect data, the Google Shopping insights are clearly not pulling complete, accurate information from Google, and the “opportunities” and suggestions are still not particularly relevant or helpful.

Talking with the product management team gave some interesting insight into what I have suspected for some time - there appears to be a huge gap between the people designing eBay’s seller tools and the people who actually use them.

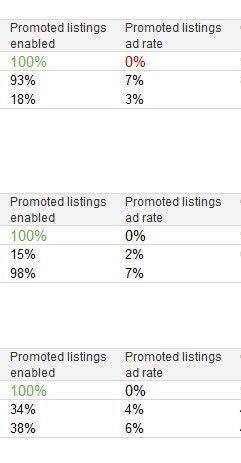

I was surprised, for example, that the product management team didn’t know that the minimum Promoted Listings rate eBay allows is 1%. Knowing that would have instantly told them that any report showing 100% Promoted Listings usage at an average rate of 0% was the result of bad data - it’s simply not possible for that to be accurate given how things actually work on eBay.
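For anyone who wants to see that logic spelled out, here is a minimal sketch of the kind of sanity check I mean. It is not eBay's code: the function name and the input fields are hypothetical, and it assumes the "average rate" is averaged over promoted listings only and that the 1% minimum mentioned above applies to every promoted listing.

```python
# Hypothetical sanity check; names and inputs are illustrative, not eBay's API.
MIN_PROMOTED_RATE_PCT = 1.0  # minimum ad rate cited in this post


def promoted_row_is_plausible(usage_pct, avg_rate_pct,
                              min_rate_pct=MIN_PROMOTED_RATE_PCT):
    """Return False when the reported usage and average rate cannot both be true."""
    if usage_pct <= 0:
        # No listings are promoted, so the average rate is not constrained.
        return True
    # Every promoted listing has a rate of at least the minimum,
    # so the average over promoted listings cannot fall below it.
    return avg_rate_pct >= min_rate_pct


# The row described above: 100% usage at an average rate of 0%.
suspicious = promoted_row_is_plausible(usage_pct=100, avg_rate_pct=0)
normal = promoted_row_is_plausible(usage_pct=100, avg_rate_pct=2.5)
```

Here `suspicious` comes out `False`, which is the signal that the underlying data needs a second look before it reaches a seller.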

There also seemed to be some misunderstanding about how Good Til Canceled automatic relisting works. I raised a concern that sometimes the percentages in the report change due to the total number of listings being considered changing - my suspicion was that listings that were possibly in the GTC relist “up to 24 hour” re-indexing window were not being counted properly.

The product team didn’t understand this at first and at one point even asked if the listing number stays the same when a Good Til Canceled listing is renewed (it does) - again showing that there seems to be a real lack of practical knowledge about how exactly sellers use eBay and the tools this team is tasked with creating.
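To show why a shifting total matters, here is a small illustration with made-up numbers. The listing counts are purely hypothetical, and the idea that listings drop out of the count while re-indexing is my suspicion, not something eBay has confirmed.

```python
# Illustrative arithmetic only; all counts below are invented for the example.
def percent_of_listings(matching, total_counted):
    """Share of counted listings that match some criterion, as a percentage."""
    return 100.0 * matching / total_counted


matching = 50          # listings the report flags for some metric
total_listings = 200   # all active listings

# Normal case: every active listing is counted.
before = percent_of_listings(matching, total_listings)

# Suspected case: 40 unflagged listings are temporarily left out of the
# total while they sit in the GTC re-indexing window.
during = percent_of_listings(matching, total_listings - 40)
```

With these numbers the figure moves from 25% to 31.25% even though nothing about the listings themselves has changed.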

Harry’s response indicated this report was a work in progress, though there was no indication of this before the report was made available to some sellers - it was only framed that way after the issues with the report were brought to light.

This also raises concerns for me about the overall QA/QC process at eBay for new tools and features. Why were some of these issues not detected in initial testing? Is it because the testers don’t know enough about “how eBay works” on a practical level to realize there was something wrong?

If so - what is Harry Temkin as VP of Seller Experience & Tools doing about that? The community engagement and conference calls are a good first step, but longer term there needs to be a strategy in place to make sure the people designing and executing this stuff have some idea of how it will be used in practical application.

Maybe eBay can use the new eBay Experts program to pay experienced sellers to provide training to the product teams too? Win-win!

Harry said the issues being raised would be addressed between January and March, before the report is rolled out to the masses. It’s now March and while I have seen some improvements, I also still see a lot of work to be done.

Hopefully eBay can pull this one off - I think this kind of reporting could be really helpful for sellers, if it provides accurate data and relevant, actionable insights.

Please keep the opt-out for SD open. I sell small 35mm slides, often at 1.99, and I charge 85p post. I will lose all my sales if people are expected to pay 2.70 post, and I will make a loss. Can you reassure me? Thanks, Ed